- Product

- AI coaching companion for League of Legends players

- Goal

- Deliver personal, actionable feedback players can use between matches

- My role

- Product designer: UX, insight delivery system, LLM output formatting

- Team

- Me + 2 developers + data scientist

- Constraints

- 3 weeks; zero LoL knowledge; scope cap to stay lean vs. established stat tools

- Shipped

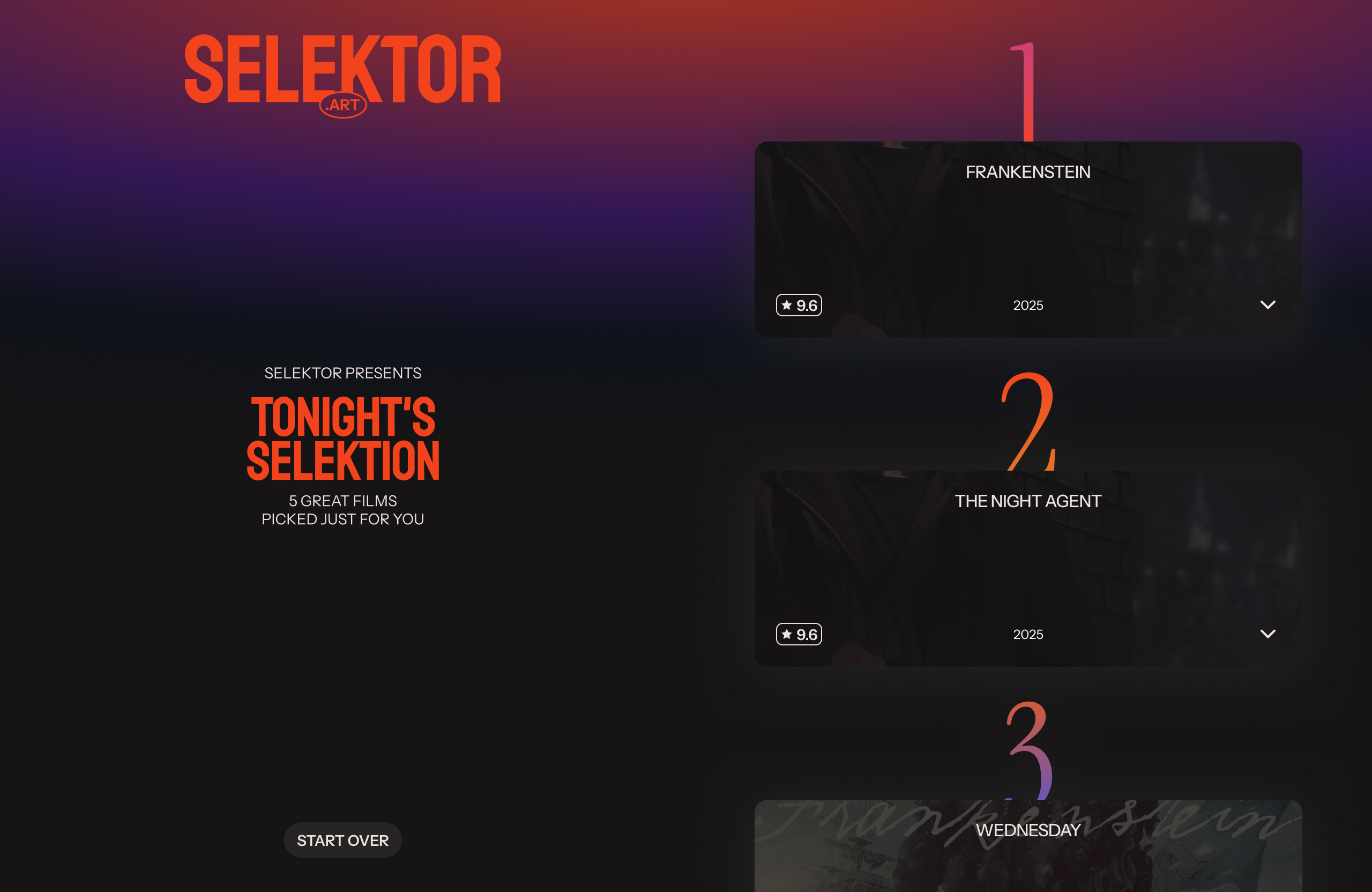

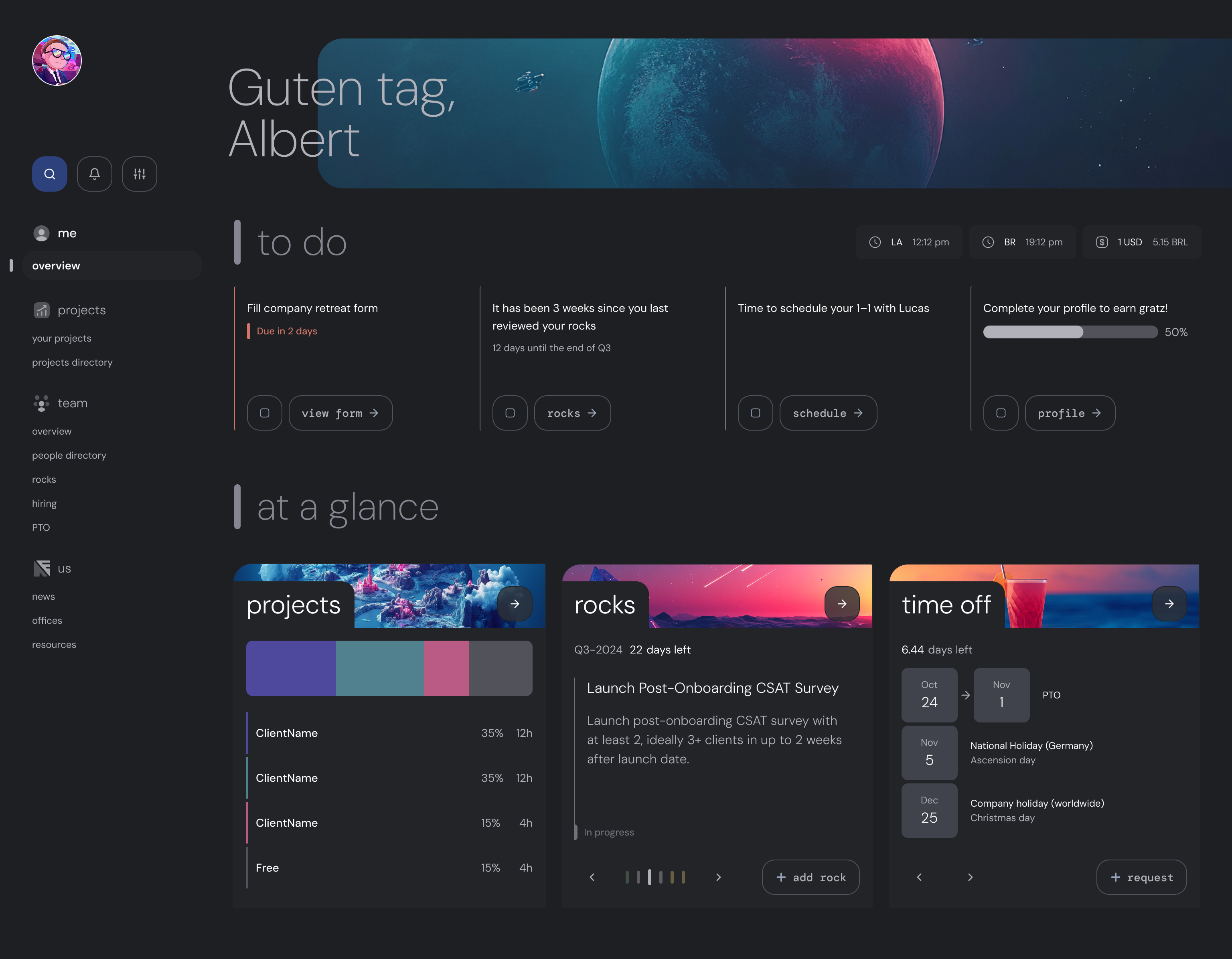

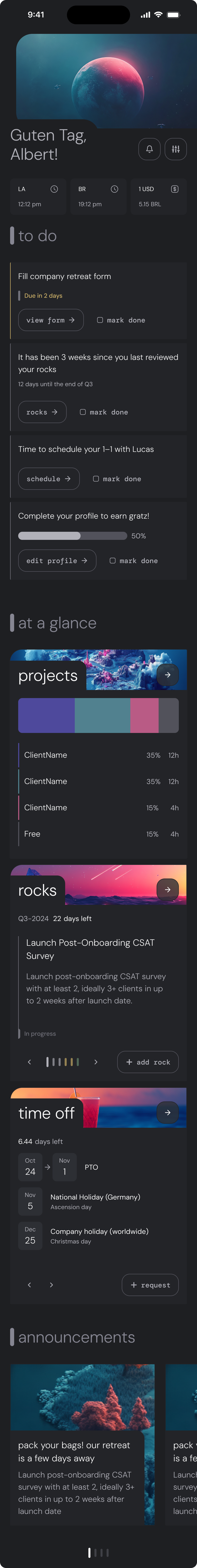

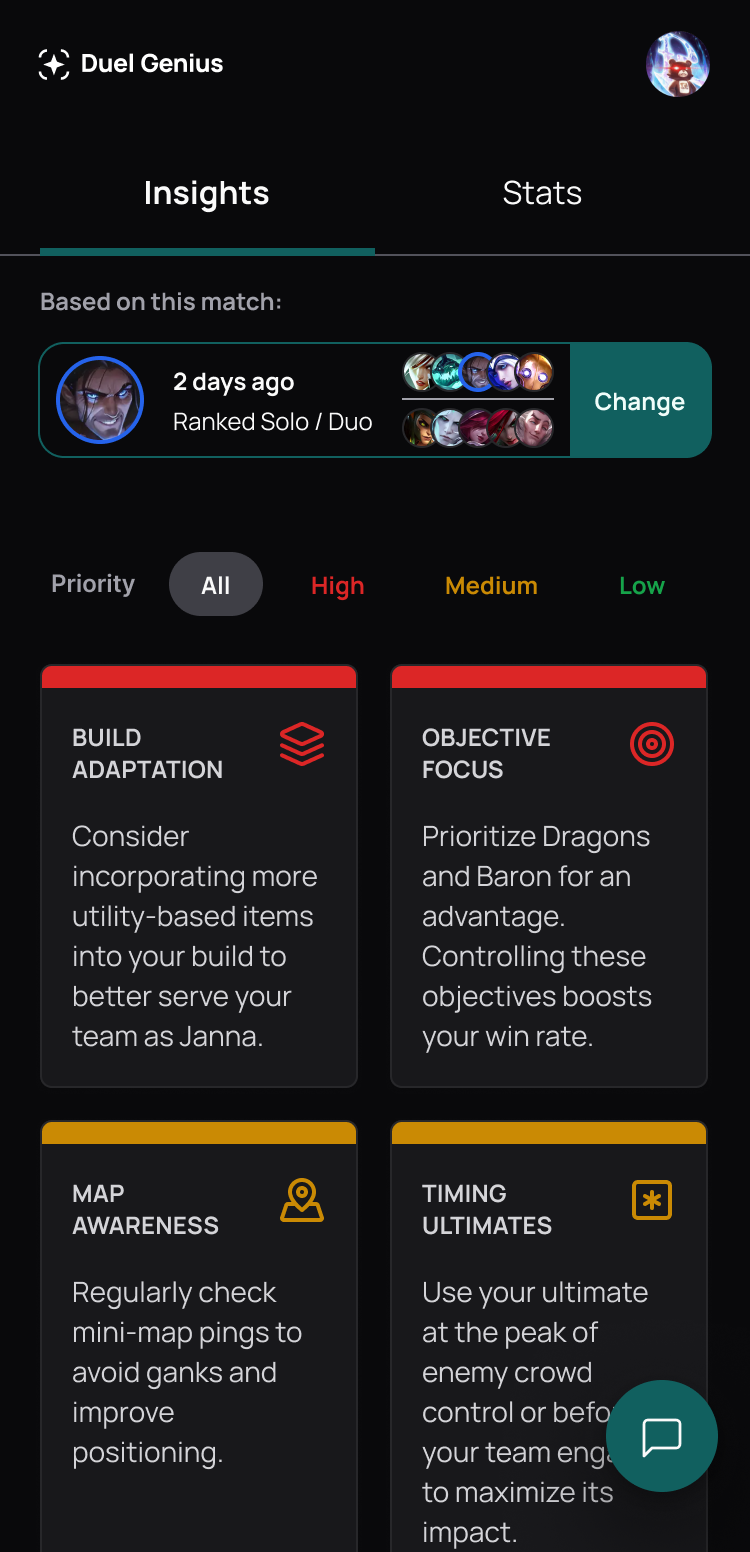

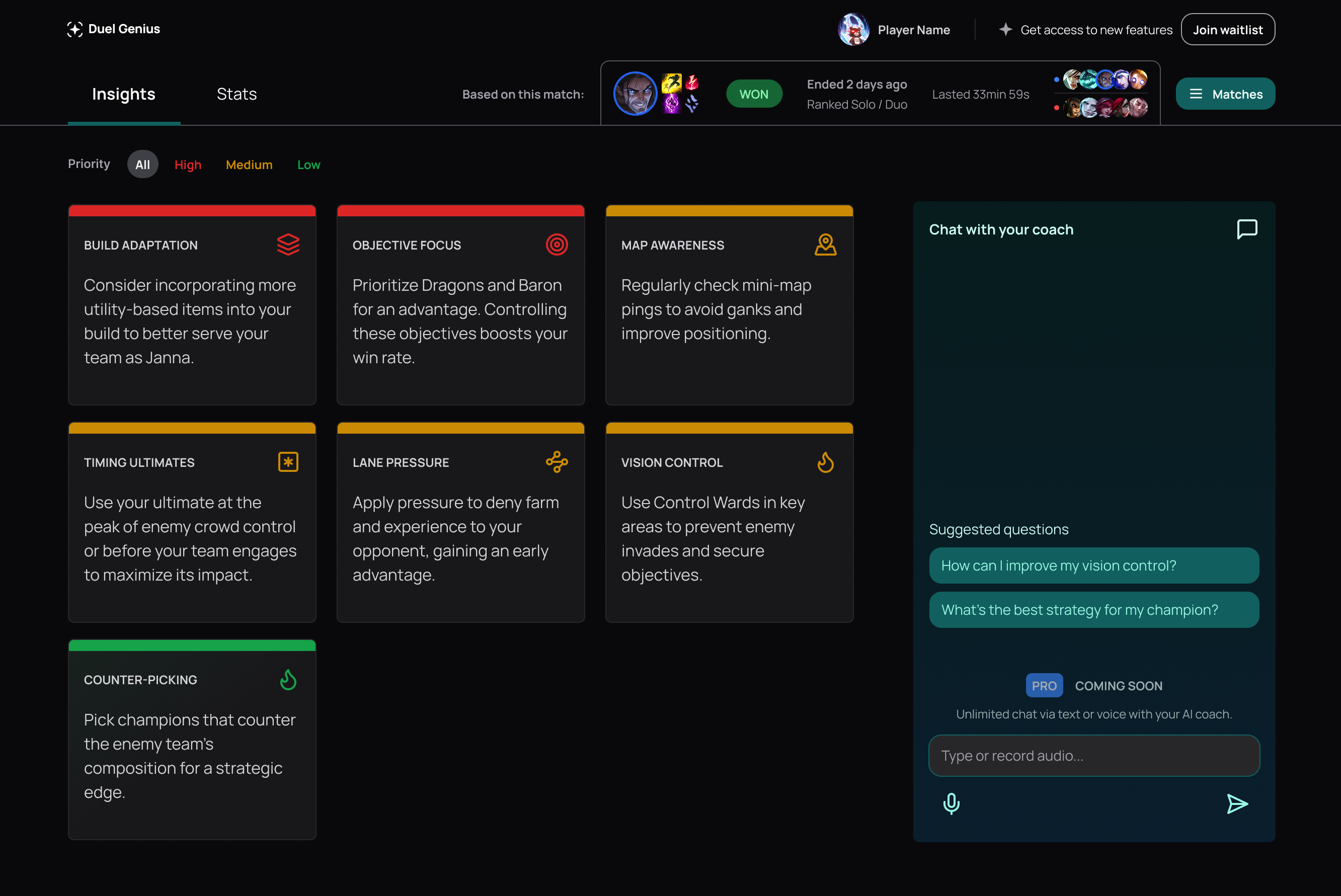

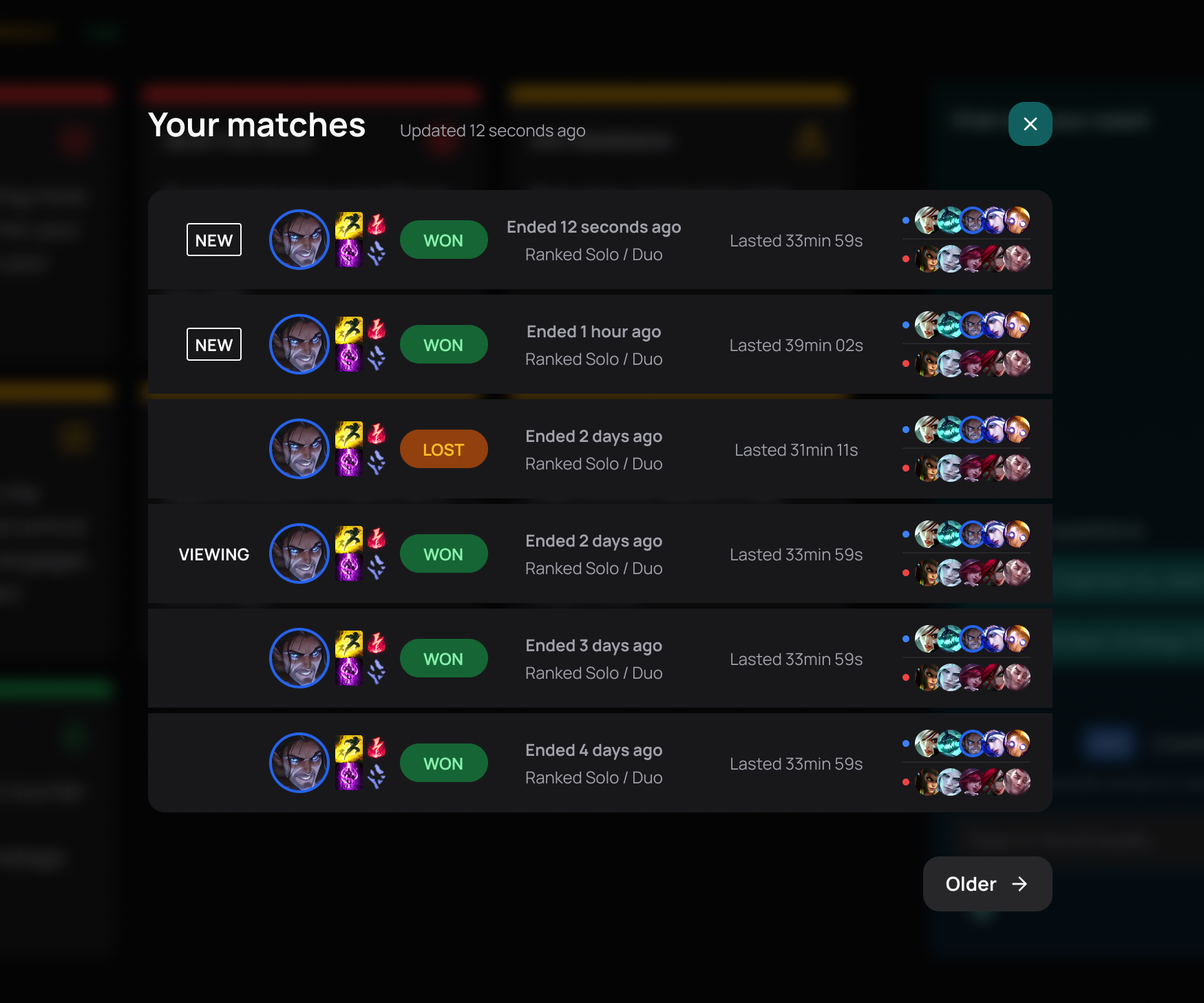

- AI insights dashboard, scannable card system, expandable modals, chat as secondary

- Result

- Serious amateur players engaged immediately; clarified target audience

→ Context

Over 3 weeks, I worked with two developers and a data scientist to design and build a companion app for League of Legends players who take improvement seriously.

I came in with no LoL experience and had to learn the game’s vocabulary, its decision-making, and the way players already read stats. Fortunately, two teammates were experienced LoL players, which gave us strong instincts for what belongs in that world.

We built an AI-powered product, but chat was never the centerpiece of the experience.

→ Core challenge

Most competitive gaming advice is generic. The challenge is to make feedback personal and actionable, and to deliver it fast enough that a player can use it in their next match.

We designed the experience as a pre-game, coach-to-athlete moment: a focused, streamlined interface for getting a few useful insights quickly.

→ Key decisions

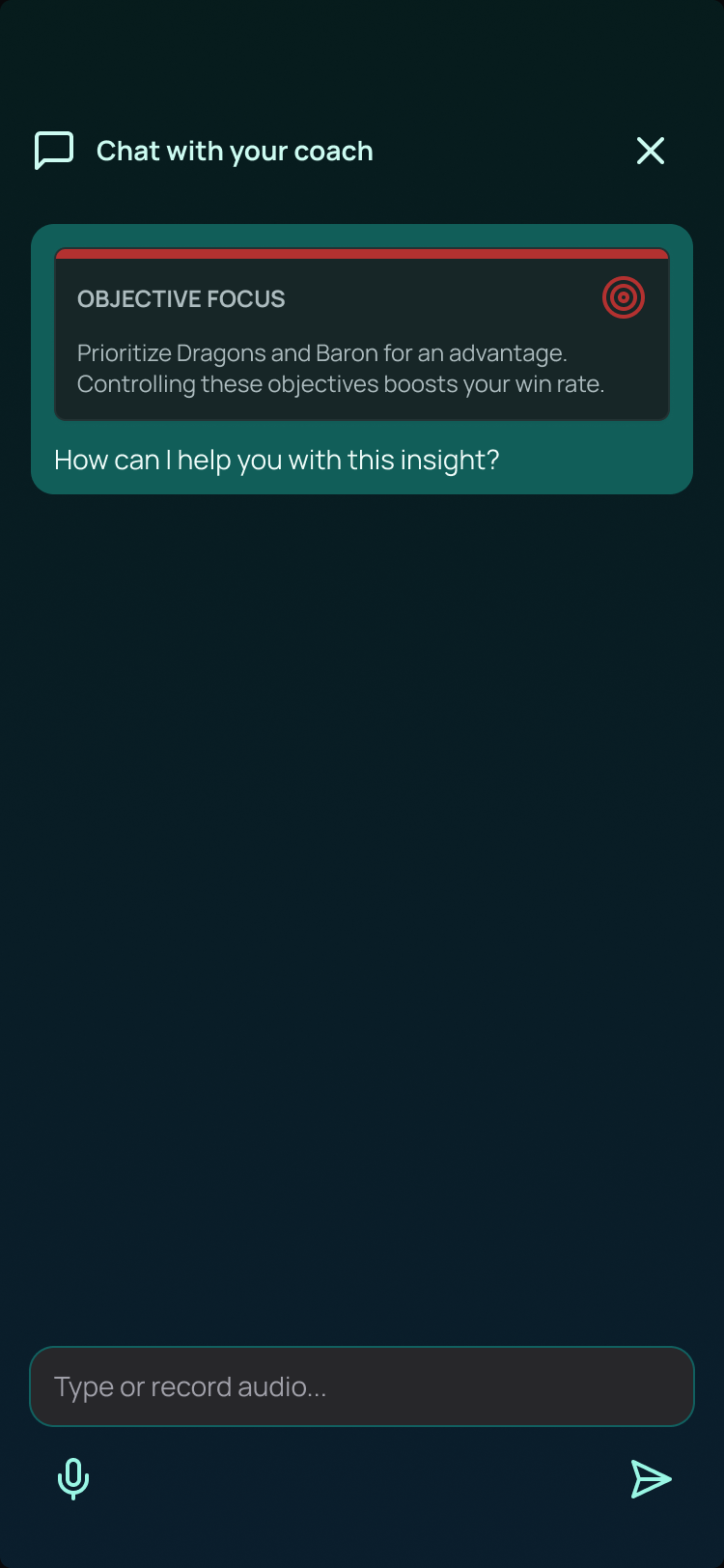

We avoided a “one big chat” interface and designed an insight delivery system instead:

- Insights as limited cards (not an endless thread)

- A cap on how many insights appear at once

- Visual hierarchy that makes the high-priority insights obvious

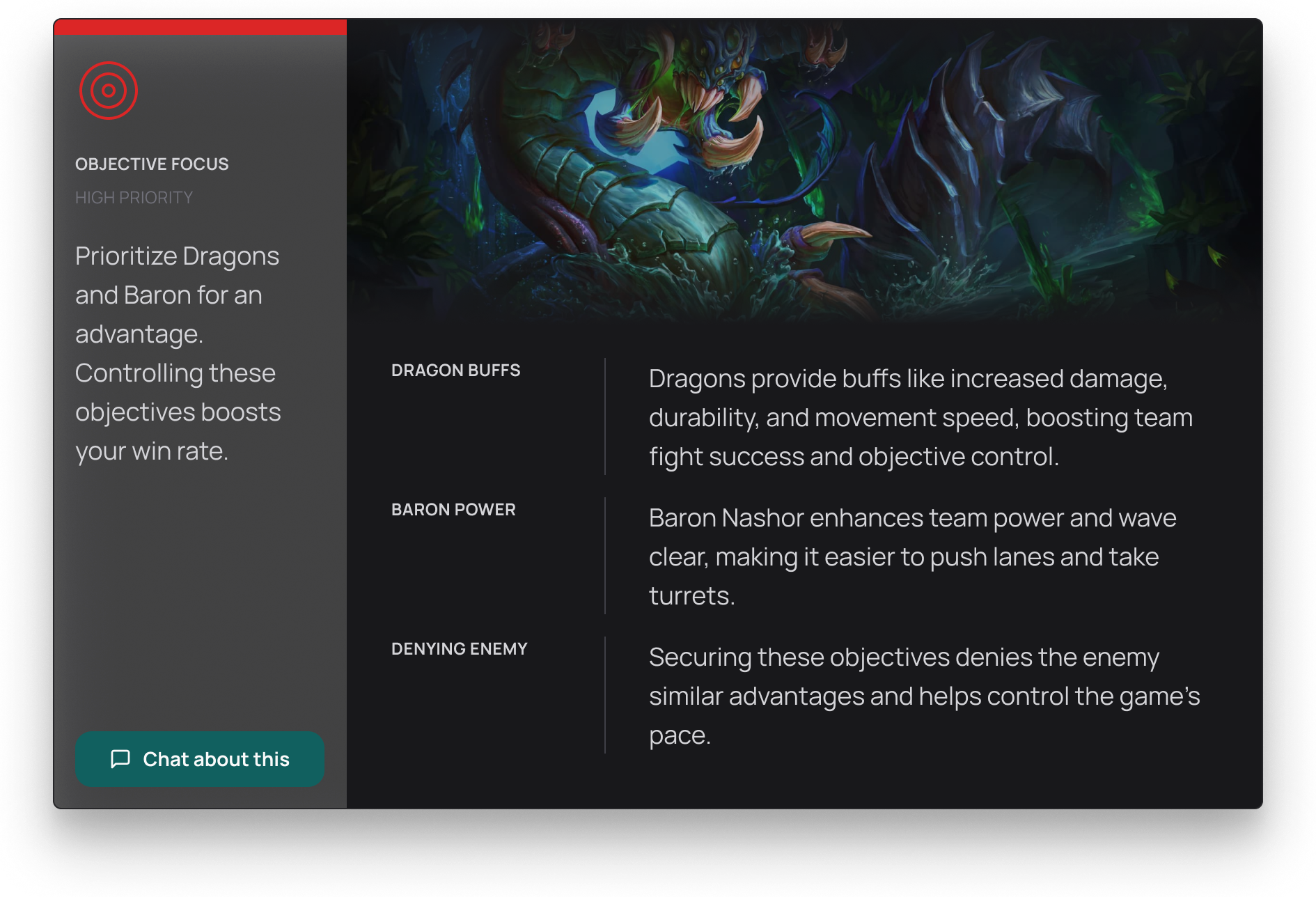

- A modal to expand one insight when needed

- A chat sidebar as secondary, not the core

This was a direct response to one of the most common AI UX failure modes: excessive output. Even good advice is useless when it takes too long to parse.
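As a rough sketch of the card rules above (cap the count, rank by priority, emphasize the top card), the TypeScript below assumes a hypothetical `Insight` shape with a numeric `priority` (1 = most important) and an assumed cap of 3. Neither the field names nor the cap come from the shipped product; they only illustrate the idea.

```typescript
// Hypothetical shape of one coaching insight; field names are illustrative assumptions.
interface Insight {
  id: string;
  title: string;
  priority: number; // 1 = most important (assumed convention)
}

// What the dashboard renders for each visible card.
interface CardView {
  insight: Insight;
  featured: boolean; // true only for the top card, which gets the strongest visual weight
}

// Assumed cap on visible cards; the real product's number is not stated.
const MAX_VISIBLE_INSIGHTS = 3;

// Rank by priority, keep only up to the cap, and flag the top card for emphasis.
// Copies the input so the caller's array is not reordered.
function selectVisibleInsights(
  insights: Insight[],
  max: number = MAX_VISIBLE_INSIGHTS,
): CardView[] {
  return [...insights]
    .sort((a, b) => a.priority - b.priority)
    .slice(0, max)
    .map((insight, index) => ({ insight, featured: index === 0 }));
}
```

Anything past the cap simply is not rendered, so the player never faces a growing thread; a lower-priority insight could still be reached through the secondary chat.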

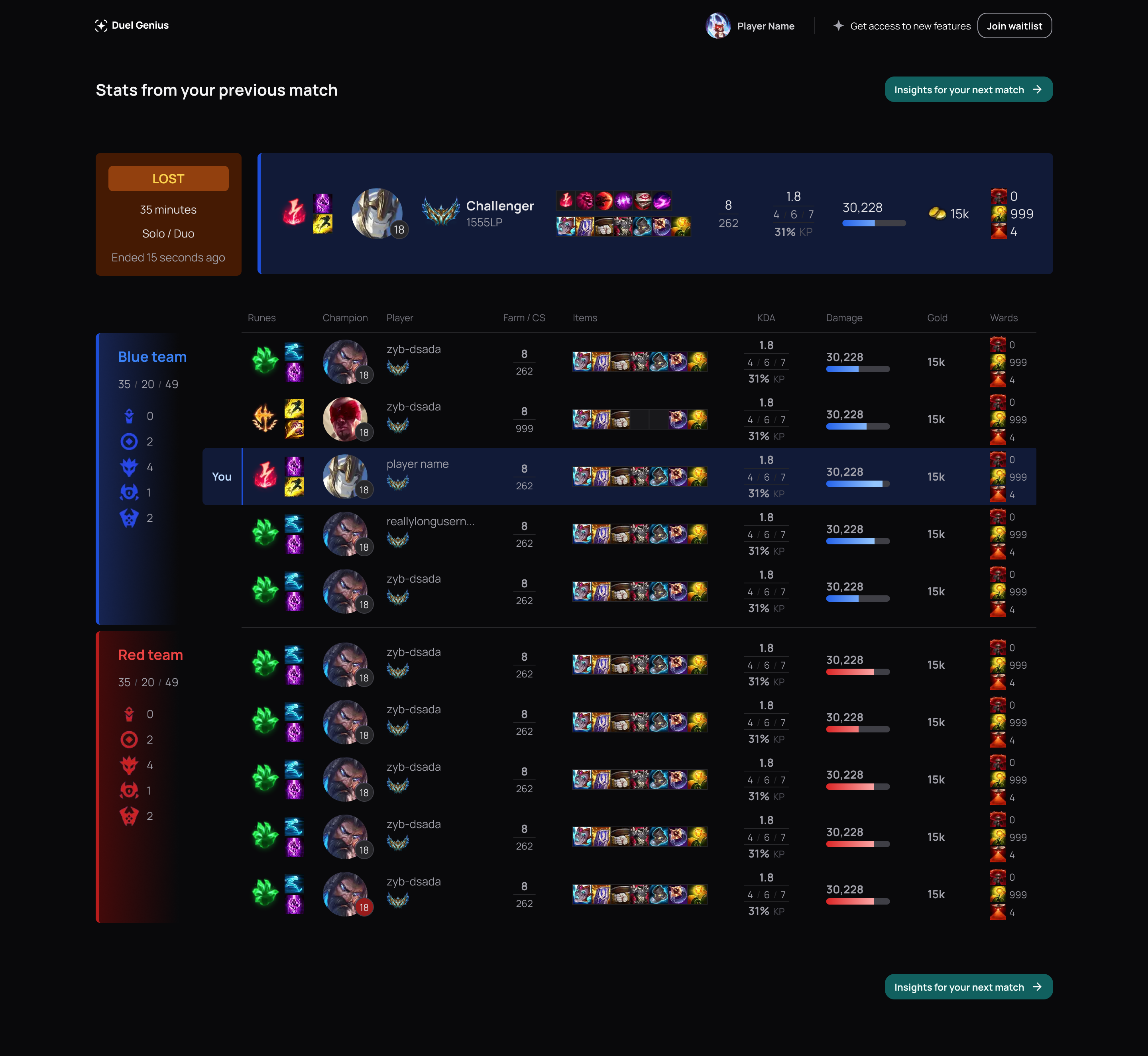

We made a deliberate scope call: don’t try to be all things to all people.

Gamers are used to specific stat conventions from official dashboards and a small set of dominant tools. We couldn’t replicate every stats tool, add AI insights on top, and still stay lean.

Players could keep using existing tools for deep stats and use our app for personalized coaching.

→ Making LLM output scannable

A big part of the design work was shaping raw AI output into something readable under pressure:

- Clear labeled sections

- Small, digestible chunks of text

- One primary action per insight

- Visual hierarchy that makes the “what to do next” obvious

This is where the product went beyond “AI chat”. The interface did the work of turning generated text into coaching.
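One way these formatting rules could be enforced in code, sketched in TypeScript under stated assumptions: the model is prompted to return JSON with three labeled sections (`summary`, `why`, `action`, all hypothetical names), and a parser rejects output that is missing a section or that returns several actions, then clips each section to an assumed 140-character limit. This is an illustration, not the team’s actual pipeline.

```typescript
// Hypothetical labeled sections each insight is expected to contain.
interface ParsedInsight {
  summary: string; // what happened
  why: string;     // why it matters
  action: string;  // the single thing to try next game
}

const MAX_SECTION_CHARS = 140; // assumed limit to keep each chunk short

// Collapse whitespace and shorten to the limit at a word boundary, adding an ellipsis.
function clip(text: string, max: number = MAX_SECTION_CHARS): string {
  const clean = text.trim().replace(/\s+/g, " ");
  if (clean.length <= max) return clean;
  const cut = clean.slice(0, max - 1);
  const lastSpace = cut.lastIndexOf(" ");
  return (lastSpace > 0 ? cut.slice(0, lastSpace) : cut) + "…";
}

// Parse raw model output (assumed to be JSON) into labeled, clipped sections.
// Returns null if any section is missing or not a single string; an array of
// actions is rejected, which enforces one primary action per insight.
function parseInsight(raw: string): ParsedInsight | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;
  const record = data as Record<string, unknown>;
  for (const key of ["summary", "why", "action"]) {
    const value = record[key];
    if (typeof value !== "string" || value.trim() === "") return null;
  }
  return {
    summary: clip(record.summary as string),
    why: clip(record.why as string),
    action: clip(record.action as string),
  };
}
```

Returning `null` lets the UI drop a malformed insight instead of falling back to raw generated text, which keeps every card short and limited to one action.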

→ What we learned

Amateur players who play 3–7 days a week liked the insights immediately because they were personal and easy to digest. Pro players were more skeptical because they already operate inside high-quality feedback loops (coaches, teammates, review routines).

That clarified the target: serious amateurs who want coaching but don’t have it.

We also learned that our MVP didn’t need heavy fine-tuning to deliver useful insights: with minimal prompt engineering, LLMs generated insights that were useful and actionable.

→ What I’d do next

Run more observational testing in the real between-match context, and track whether players actually apply an insight in their next game and come back for more.